This year I decided to learn a bit about automation and infrastructure as code (IaC), and in this article I will demonstrate some Terraform basics I have learned, using the Nutanix Terraform Provider.

During this article, we will learn how to:

1. Install Terraform;

2. Connect Nutanix Terraform Provider;

3. Upload cloud image to the Nutanix Cluster;

4. Deploy a Nutanix Subnet, including IP-address Management;

5. Deploy a VM and connect it to the provisioned subnet;

6. Run a little cloud-init script to create a user in the deployed VM.

Disclaimer: I am just a beginner with Terraform. This post may contain inaccuracies or misinterpretations. Sorry for that!

What is Terraform? I will copy-paste the description from the official site because it perfectly describes the product:

HashiCorp Terraform is an infrastructure as code tool that lets you define both cloud and on-prem resources in human-readable configuration files that you can version, reuse, and share. You can then use a consistent workflow to provision and manage all of your infrastructure throughout its lifecycle. Terraform can manage low-level components like compute, storage, and networking resources, as well as high-level components like DNS entries and SaaS features.

Building an infrastructure requires three steps:

- Write: define desired infrastructure state in the configuration files;

- Plan: Terraform will show you the changes it will make to the infrastructure;

- Apply: Terraform provisions the infrastructure or updates it to the desired state.

During my learning experience with Terraform, I discovered its usefulness for my lab. How often do you build, install, test, and destroy virtual machines repeatedly? I do it on a regular basis. It can take a lot of time to build a lab environment from scratch. With Terraform, you can simplify routine lab tasks and reduce time to deploy. All you need is to spend some time to automate it.

Imagine how useful Terraform can be in production, especially when you need to deploy and configure many infrastructure components. And that is before we even start discussing the IaC approach, where code is the source of truth for your cloud or on-premises infrastructure.

Caveat: Sometimes automating something takes more effort than doing it manually. Automating everything may not always be the best option.

Now that we know what Terraform is, what will we do next? In this article, we will:

- Install Terraform;

- Connect Nutanix Terraform Provider;

- Upload cloud image to the Nutanix Cluster;

- Deploy a Nutanix Subnet, including IP-address Management;

- Deploy a VM and connect it to the provisioned subnet;

- Run a little cloud-init script to create a user in the deployed VM.

Before we begin: to write the files, I use Visual Studio Code with the HashiCorp Terraform plugin. It makes writing the files much easier because it includes autocompletion and syntax highlighting.

Installing Terraform

This is a simple task. I use Rocky Linux 9 as an operating system:

[root@tf-host ~]# yum install -y yum-utils

[root@tf-host ~]# yum-config-manager --add-repo https://rpm.releases.hashicorp.com/RHEL/hashicorp.repo

[root@tf-host ~]# yum -y install terraform

[root@tf-host ~]# terraform -version

Terraform v1.10.4

on linux_amd64

Using the commands above, we have added the HashiCorp yum repository and installed Terraform.

We can also enable autocompletion:

[root@tf-host ~]# touch ~/.bashrc

[root@tf-host ~]# terraform -install-autocomplete

You can use this page to find the installation method for your operating system.

Now, let’s create a directory and three files in the directory:

[root@tf-host ~]# mkdir ~/ntnx-tf-test/

[root@tf-host ~]# touch ~/ntnx-tf-test/main.tf

[root@tf-host ~]# touch ~/ntnx-tf-test/variables.tf

[root@tf-host ~]# touch ~/ntnx-tf-test/terraform.tfvars

[root@tf-host ~]# ls ~/ntnx-tf-test

main.tf terraform.tfvars variables.tf

main.tf is used for declaring our infrastructure: providers and resources (more about what these are later);

variables.tf is used for declaring the variables used in the project. Although you can hardcode your values in main.tf, using variables will make your life easier in the future when you need to change something.

terraform.tfvars is a file that contains values for variables.

Using Terraform Providers

The first thing we need to understand is that Terraform itself does not manage remote infrastructure. Terraform uses providers.

Providers are responsible for interaction with remote APIs and exposing resources. For example, if you want to manage Nutanix (in our case), you need to use Nutanix Provider; if you want to manage VMware, you need VMware vSphere Provider.

At the moment of writing, there are about 5000 different providers. You can see all of them at https://registry.terraform.io/.

There are three tiers of providers:

1. Official: developed and maintained by HashiCorp;

2. Partner: maintained by third-party companies against their own APIs. Partners participate in HashiCorp Technology Partner Program;

3. Community: published by individuals or groups of maintainers.

To start working with the Nutanix Provider, on the Providers page, use the search bar at the top and find Nutanix.

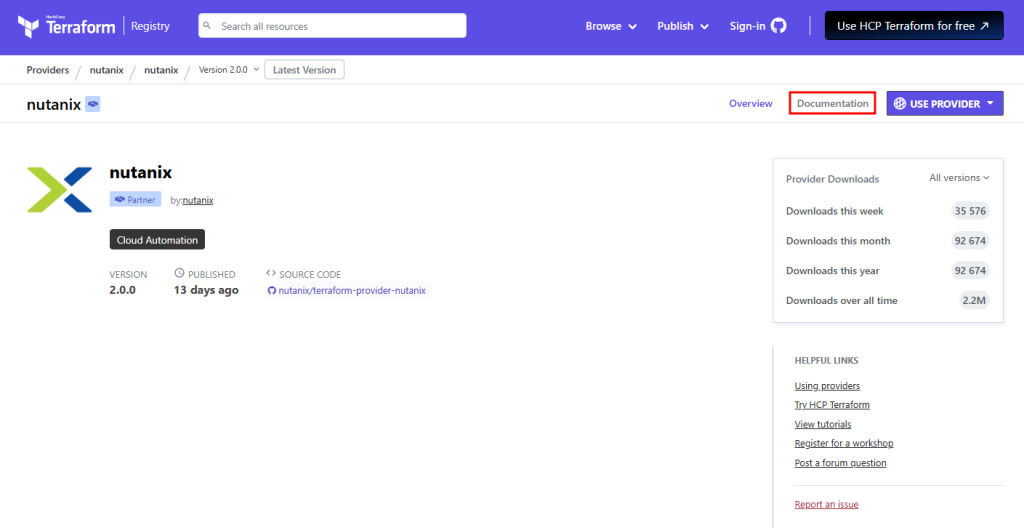

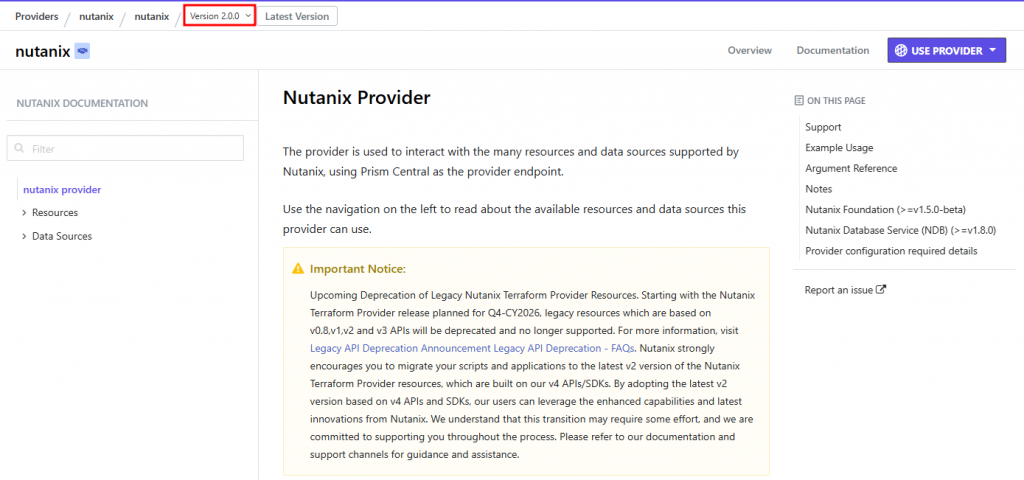

Click Documentation to get information on how to work with this provider:

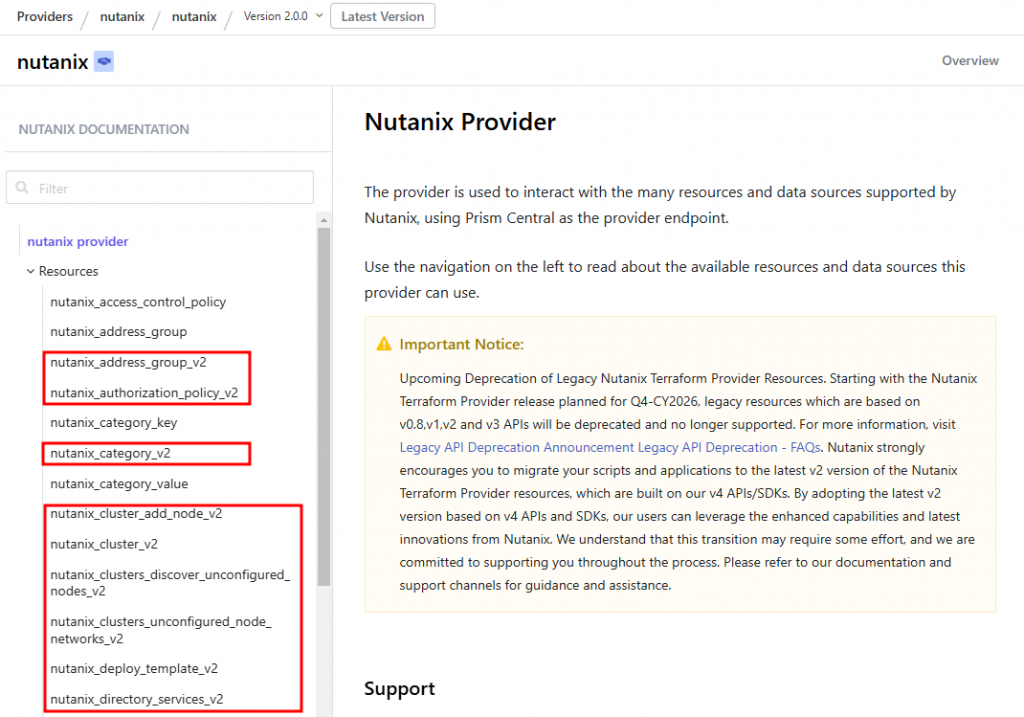

The documentation may vary from provider to provider, but in the case of the Nutanix Provider, we have documentation for resources (objects we can manage: VMs, networks, and so on) and data sources (data we can gather from Nutanix):

In the screenshot above, you may see an announcement from Nutanix about the deprecation of legacy APIs and Terraform Provider resources.

Because I use Community Edition with Prism Central PC2024.2, which does not have v4 APIs, I use old Terraform resources, but if you run AOS 7.0 and PC2024.3, which support v4 APIs, I recommend you check out the v2 resources and use them:

Now that we have briefly covered Terraform Providers, let’s start to code. The first thing we need to do is tell Terraform which provider we will use and connect to the Nutanix Prism Central instance.

First, let’s declare a few provider-related variables in the variables.tf file:

variable "pc" {

type = object({

address = string

username = string

password = string

})

}

variable "ntnx_cluster" {

type = string

}

Variable “pc” will be used to connect to Prism Central, and variable “ntnx_cluster” will be used to select a cluster for provisioning. You can read more about Variables here.

Now, let’s set the values in the terraform.tfvars file:

pc = {

address = "ntnx-ce-pc.vmik.lab"

username = "admin"

password = "Nutanix/4u"

}

ntnx_cluster = "ntnx-ce21"

When the preparation steps are finished, it’s time to declare a provider in the main.tf file:

terraform {

required_providers {

nutanix = {

source = "nutanix/nutanix"

version = "2.0.0"

}

}

}

provider "nutanix" {

endpoint = var.pc.address

username = var.pc.username

password = var.pc.password

insecure = true

wait_timeout = 30

}

data "nutanix_cluster" "cluster" {

name = var.ntnx_cluster

}

As you can see, in the required_providers block, we set the Nutanix provider source and pinned the provider version.

In the provider block below it, we provide the connection data that the provider will use. All those variables and values were defined earlier in the corresponding files.
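The connection values above live in terraform.tfvars, including the password in plain text. As a hedged alternative, Terraform also reads variables from environment variables named TF_VAR_<name>, and for an object variable such as pc the value is written in HCL syntax. The sketch below is only an illustration: PC_PASSWORD is a hypothetical name invented for this example, and because terraform.tfvars takes precedence over environment variables, it assumes the pc block has been removed from that file.

```shell
# Sketch: pass the "pc" object variable to Terraform through the environment.
# PC_PASSWORD is a hypothetical variable name for this example; set it in your
# shell session beforehand so the password is not stored in a file.
PC_PASSWORD="${PC_PASSWORD:-changeme}"  # placeholder default, replace in real use

# Terraform maps TF_VAR_pc to var.pc; object values use HCL syntax.
# Note: a password containing a double quote or backslash would need escaping.
TF_VAR_pc="{ address = \"ntnx-ce-pc.vmik.lab\", username = \"admin\", password = \"${PC_PASSWORD}\" }"
export TF_VAR_pc

echo "TF_VAR_pc exported (${#TF_VAR_pc} characters)"
```

With the variable exported, terraform plan and terraform apply in the same shell session would pick up var.pc without it appearing in terraform.tfvars.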

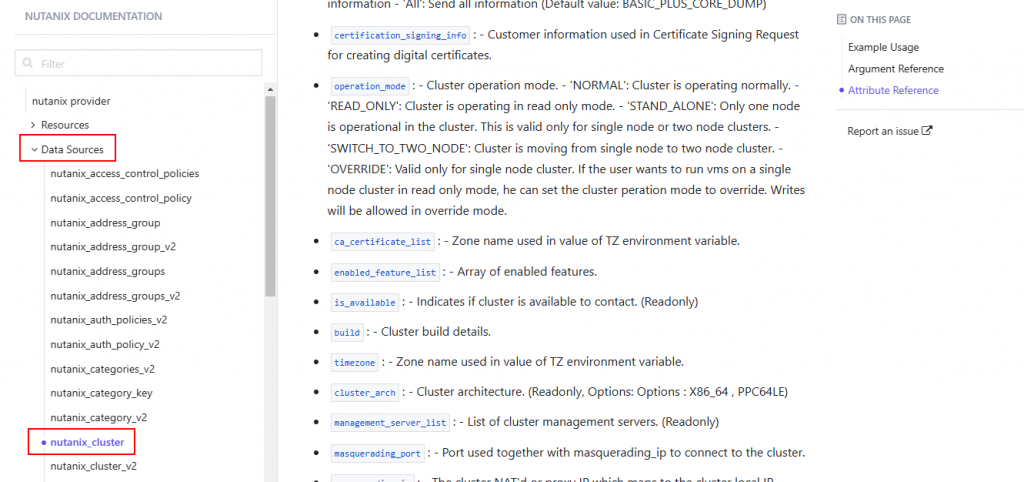

The last block, data, is used to gather the Nutanix cluster’s specific data. Next, we will use it to get the cluster’s UUID.

If you open Nutanix Provider documentation, you can find a data source named nutanix_cluster, which describes what we can gather:

When basic files are ready, return to the console and run the terraform init command to initialize the working directory, create supporting files, and install Terraform Provider:

[root@tf-host ntnx-tf-test]# terraform init

Initializing the backend...

Initializing provider plugins...

- Finding nutanix/nutanix versions matching "2.0.0"...

- Installing nutanix/nutanix v2.0.0...

- Installed nutanix/nutanix v2.0.0 (signed by a HashiCorp partner, key ID 8457185C2AB8CF55)

Partner and community providers are signed by their developers.

If you'd like to know more about provider signing, you can read about it here:

https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that Terraform can guarantee to make the same selections by default when

you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

Now we can begin declaring resources.

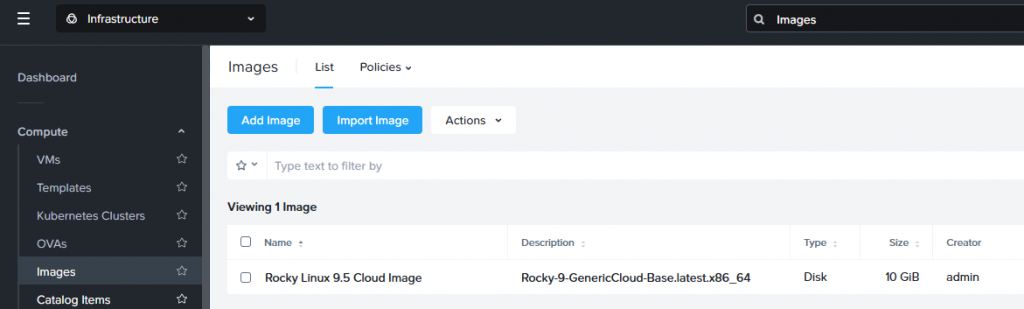
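Once the directory is initialized, a quick check of the files written so far can catch typos early. This is a minimal sketch, assuming Terraform is installed and the project lives in ~/ntnx-tf-test as above; the function name check_config is made up for this example. terraform fmt -check reports files that are not in canonical format, and terraform validate checks that the configuration is internally consistent without contacting Prism Central.

```shell
# Sketch: format and consistency checks for a Terraform project directory.
# check_config is a hypothetical helper name used only in this example.
check_config() {
  # Run in a subshell so the caller's working directory is unchanged.
  (
    cd "$1" || exit 1
    terraform fmt -check || echo "some files are not canonically formatted; run: terraform fmt"
    terraform validate
  )
}

if command -v terraform >/dev/null 2>&1; then
  echo "usage: check_config ~/ntnx-tf-test"
else
  echo "terraform is not installed on this host"
fi
```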

Uploading cloud image to the Nutanix Cluster

To deploy a virtual machine, I will use a Rocky Linux Cloud Image. Many Linux distros provide cloud images, and you can choose what you like.

A cloud image is just a prepared virtual disk (in my case, qcow2) with a preinstalled operating system, which can be easily configured during deployment using cloud-init.

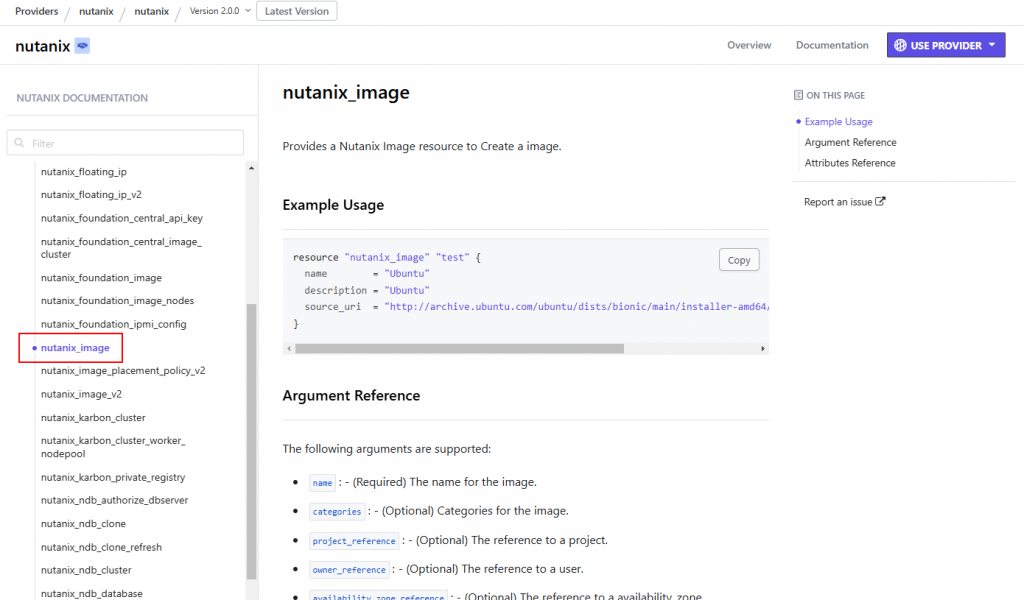

To download the cloud image and place it in the Nutanix cluster, we will create our first resource, nutanix_image.

Add this block to the end of the main.tf file:

resource "nutanix_image" "rl95_image" {

name = "Rocky Linux 9.5 Cloud Image"

description = "Rocky-9-GenericCloud-Base.latest.x86_64"

source_uri = "https://dl.rockylinux.org/pub/rocky/9/images/x86_64/Rocky-9-GenericCloud-LVM.latest.x86_64.qcow2"

}

In the code block above, we use the resource type nutanix_image and give it the name rl95_image. If we need to refer to this resource in later blocks, we use this name.

As usual, you can find the information about the resource in the documentation and see what you can do with that:

After saving the file, run the terraform plan command. This is a great command, displaying which resources will be created, deleted, or updated:

[root@tf-host ntnx-tf-test]# terraform plan

...

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# nutanix_image.rl95_image will be created

+ resource "nutanix_image" "rl95_image"

...

Plan: 1 to add, 0 to change, 0 to destroy.

If everything is OK, run terraform apply to apply the configuration:

[root@tf-host ntnx-tf-test]# terraform apply

You will be asked to accept the changes:

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

The image will start to download:

nutanix_image.rl95_image: Creating...

nutanix_image.rl95_image: Still creating... [10s elapsed]

nutanix_image.rl95_image: Still creating... [20s elapsed]

nutanix_image.rl95_image: Still creating... [30s elapsed]

nutanix_image.rl95_image: Still creating... [40s elapsed]

nutanix_image.rl95_image: Creation complete after 3m6s [id=9c16f71a-2abf-4082-99f5-356987414123]

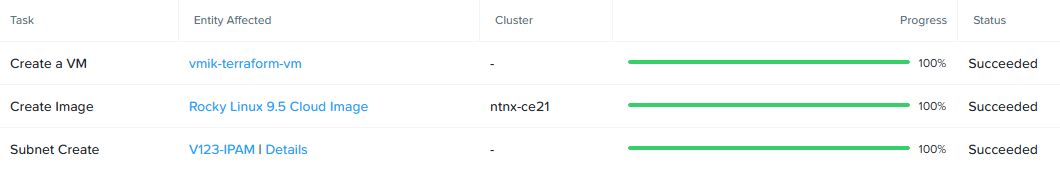

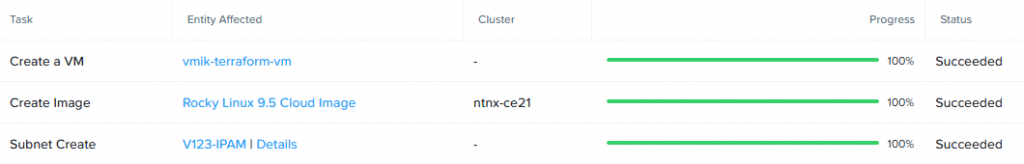

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Congratulations! You created your first resource using Terraform:

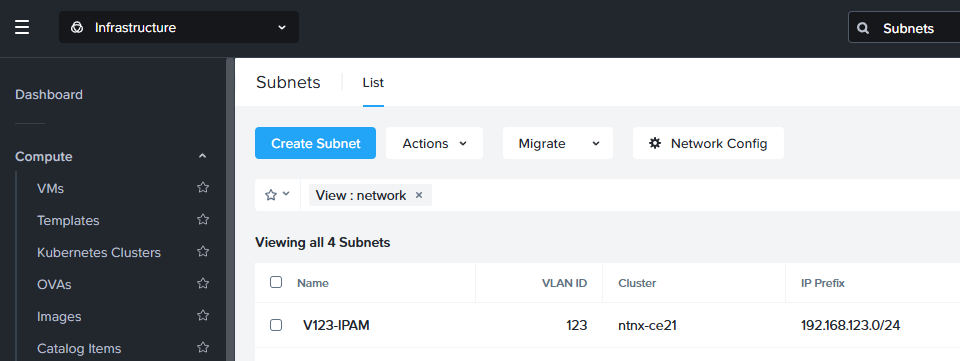
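A slightly stricter variant of the plan and apply steps above is to save the plan to a file and then apply exactly that file, so that what gets applied is what was reviewed. The following is a hedged sketch rather than a required workflow; the file name tfplan and the function name plan_then_apply are arbitrary choices for this example.

```shell
# Sketch: save the plan to a file and apply exactly that plan.
# plan_then_apply and the file name "tfplan" are assumptions for this example.
plan_then_apply() {
  (
    cd "$1" || exit 1
    # Write the execution plan to a file instead of only printing it.
    terraform plan -out=tfplan || exit 1
    # Applying a saved plan file does not ask for confirmation,
    # so review the plan output before reaching this step.
    terraform apply tfplan
  )
}

if command -v terraform >/dev/null 2>&1; then
  echo "usage: plan_then_apply ~/ntnx-tf-test"
else
  echo "terraform is not installed on this host"
fi
```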

Deploying a Nutanix Subnet, including IP-address Management

In this example, we will create a subnet with enabled IP address management that allows us to control IP address assignment within the network.

This configuration is a bit more complicated than a simple VLAN because we need to provide some DHCP-like settings, including an IP pool with addresses allocated to virtual machines.

To create a subnet using Nutanix Provider, we will use a nutanix_subnet resource.

First, we add a new variable to the variables.tf:

variable "ipam_subnet" {

type = object({

name = string

vlan_id = number

prefix = number

subnet_ip = string

gateway = string

pool_range = list(string)

dns = list(string)

search_domain = list(string)

})

}

In addition, add the values to the terraform.tfvars file:

ipam_subnet = {

name = "V123-IPAM"

vlan_id = 123

prefix = 24

subnet_ip = "192.168.123.0"

gateway = "192.168.123.1"

pool_range = ["192.168.123.21 192.168.123.31"]

dns = ["192.168.123.10"]

search_domain = ["vmik.lab"]

}

If you are familiar with IPAM subnets, you may notice that these are the settings required to create a managed subnet:

The last step is to declare a subnet resource in the main.tf file:

resource "nutanix_subnet" "project_subnet" {

cluster_uuid = data.nutanix_cluster.cluster.id

name = var.ipam_subnet.name

vlan_id = var.ipam_subnet.vlan_id

subnet_type = "VLAN"

prefix_length = var.ipam_subnet.prefix

ip_config_pool_list_ranges = var.ipam_subnet.pool_range

default_gateway_ip = var.ipam_subnet.gateway

subnet_ip = var.ipam_subnet.subnet_ip

dhcp_domain_name_server_list = var.ipam_subnet.dns

dhcp_domain_search_list = var.ipam_subnet.search_domain

}

Everything should be clear here. We are using the resource nutanix_subnet and naming it project_subnet.

In the code, we are defining the cluster where we want to create this subnet. Do you remember the data block we used in the beginning? Now, we are obtaining the Nutanix Cluster UUID through previously collected data.

All other settings describe the subnet. These are the same settings you define in the Prism interface.

After saving the files, run terraform plan:
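Since the pool range is just a string holding two addresses separated by a space, a typo in it is easy to miss. As a small sanity check, sketched here in plain shell arithmetic (this is not part of Terraform or the Nutanix Provider, and the function name ip_to_int is made up for this example), we can confirm that both ends of the pool fall inside 192.168.123.0/24 and count the addresses in the pool:

```shell
# Values copied from the ipam_subnet block in terraform.tfvars.
subnet_ip="192.168.123.0"
prefix=24
pool_range="192.168.123.21 192.168.123.31"

# Convert a dotted IPv4 address into a single integer.
# The function body runs in a subshell, so changing IFS does not leak out.
ip_to_int() (
  IFS=.
  set -- $1   # split the address on dots into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

pool_start=${pool_range%% *}   # text before the space
pool_end=${pool_range##* }     # text after the space

# Network mask for the prefix length, and the network address as an integer.
mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
net=$(( $(ip_to_int "$subnet_ip") & mask ))
start=$(ip_to_int "$pool_start")
end=$(ip_to_int "$pool_end")

if [ $(( start & mask )) -eq "$net" ] && [ $(( end & mask )) -eq "$net" ] && [ "$start" -le "$end" ]; then
  pool_size=$(( end - start + 1 ))
  echo "pool is inside ${subnet_ip}/${prefix}: ${pool_size} addresses"
else
  echo "pool range does not fit ${subnet_ip}/${prefix}" >&2
fi
```

For the values used in this article, the pool runs from .21 to .31, which gives 11 addresses available for virtual machines.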

[root@tf-host ntnx-tf-test]# terraform plan

...

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# nutanix_subnet.project_subnet will be created

+ resource "nutanix_subnet" "project_subnet"

...

We can see that Terraform will create the requested subnet. Let’s use terraform apply to create the network:

[root@tf-host ntnx-tf-test]# terraform apply

...

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# nutanix_subnet.project_subnet will be created

...

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

...

nutanix_subnet.project_subnet: Creation complete after 12s [id=58459a1a-0e3a-48b9-8229-1dcf03c608c2]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

The subnet is ready:

The last step is to create a virtual machine from the uploaded image and connect it to the network.

Deploying a VM and connecting it to the provisioned subnet

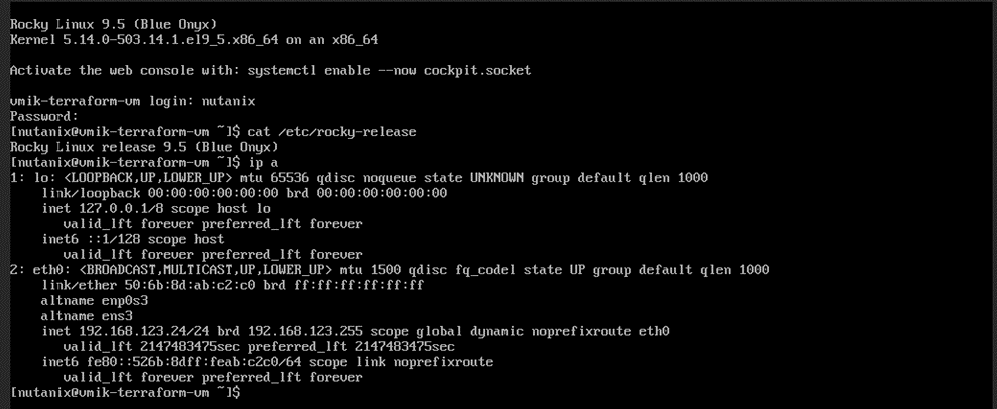

By default, the Rocky Linux cloud image does not have any default users or passwords. To solve this, during VM creation, we will apply a simple cloud-init script that creates the nutanix user and sets the beloved password, nutanix/4u.

The cloud-init config looks like this:

#cloud-config

users:

  - name: nutanix

    sudo: ['ALL=(ALL) NOPASSWD:ALL']

    lock-passwd: false

    passwd: $6$4guEcDvX$HBHMFKXp4x/Eutj0OW5JGC6f1toudbYs.q.WkvXGbUxUTzNcHawKRRwrPehIxSXHVc70jFOp3yb8yZgjGUuET.

To create a VM, we will use the nutanix_virtual_machine resource.

First, we will declare one more variable in the variables.tf file:

variable "guest_init_script" {

type = string

}

This variable stores the cloud-init script that will be applied to the VM, but first the script must be encoded to base64.

To do that, you can use base64 in your terminal, but I prefer the https://www.base64encode.org/ site.
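For the terminal route, here is a minimal sketch: save the cloud-init config to a file and encode it as a single base64 line. The file name cloud-init.yaml is an assumption for this example, and piping through tr removes the line wraps that some base64 implementations add.

```shell
# Sketch: write the cloud-init config to a file and base64-encode it.
# The file name cloud-init.yaml is an arbitrary choice for this example.
# The quoted 'EOF' keeps the shell from expanding the $6$... password hash.
cat > cloud-init.yaml <<'EOF'
#cloud-config
users:
  - name: nutanix
    sudo: ['ALL=(ALL) NOPASSWD:ALL']
    lock-passwd: false
    passwd: $6$4guEcDvX$HBHMFKXp4x/Eutj0OW5JGC6f1toudbYs.q.WkvXGbUxUTzNcHawKRRwrPehIxSXHVc70jFOp3yb8yZgjGUuET.
EOF

# Encode the file and strip newlines so the result fits on one line.
encoded=$(base64 < cloud-init.yaml | tr -d '\n')

# Print a line that can be pasted into terraform.tfvars.
echo "guest_init_script = \"${encoded}\""
```

The file written this way ends with a newline, so the last few characters of the encoded string may differ from a value produced by pasting the text into a website; both decode to the same cloud-init config.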

Update the terraform.tfvars file with the new variable:

guest_init_script = "I2Nsb3VkLWNvbmZpZwp1c2VyczoKICAtIG5hbWU6IG51dGFuaXgKICAgIHN1ZG86IFsnQUxMPShBTEwpIE5PUEFTU1dEOkFMTCddCiAgICBsb2NrLXBhc3N3ZDogZmFsc2UKICAgIHBhc3N3ZDogJDYkNGd1RWNEdlgkSEJITUZLWHA0eC9FdXRqME9XNUpHQzZmMXRvdWRiWXMucS5Xa3ZYR2JVeFVUek5jSGF3S1JSd3JQZWhJeFNYSFZjNzBqRk9wM3liOHlaZ2pHVXVFVC4="

Once we have set the variables, we declare the VM in the main.tf file:

resource "nutanix_virtual_machine" "vm" {

name = "vmik-terraform-vm"

cluster_uuid = data.nutanix_cluster.cluster.id

num_vcpus_per_socket = 1

num_sockets = 1

memory_size_mib = 2048

disk_list {

data_source_reference = {

kind = "image"

uuid = nutanix_image.rl95_image.id

}

}

nic_list {

nic_type = "NORMAL_NIC"

model = "VIRTIO"

subnet_uuid = nutanix_subnet.project_subnet.id

}

guest_customization_cloud_init_user_data = var.guest_init_script

}

We use the disk_list block to declare the disks of the virtual machine. In this example, only one disk exists: a clone of the existing Rocky Linux image. If you want to add one more disk, you can refer to the resource documentation. It should be simple.

The nic_list block is used to declare a VM’s network interface.

One thing to mention: in the example, we refer to the already created resources nutanix_image.rl95_image.id and nutanix_subnet.project_subnet.id.

terraform plan will display that a new resource will be created:

[root@tf-host ntnx-tf-test]# terraform plan

...

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# nutanix_virtual_machine.vm will be created

...

And terraform apply will create the VM:

[root@tf-host ntnx-tf-test]# terraform apply

...

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# nutanix_virtual_machine.vm will be created

...

Plan: 1 to add, 0 to change, 0 to destroy.

...

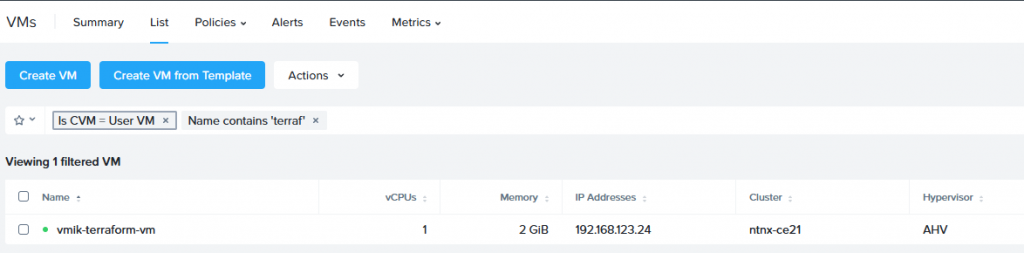

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

We created a new virtual machine with the necessary specifications:

You can see that the VM is connected to the network and received an IP address from the created subnet.

The VM is ready to use:

This is how easily we can create a simple infrastructure using Terraform and the Nutanix Provider.

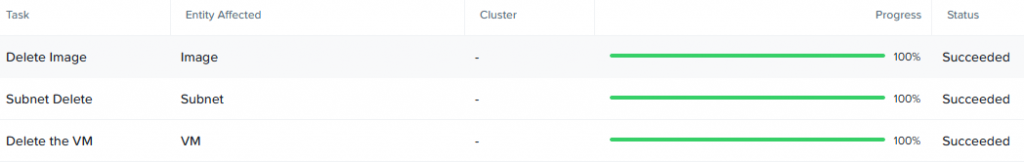

One more command to mention is terraform destroy. It deletes everything that Terraform created before, so use it carefully and only when you know what you are doing.

[root@tf-host ntnx-tf-test]# terraform destroy

Terraform will perform the following actions:

# nutanix_image.rl95_image will be destroyed

# nutanix_subnet.project_subnet will be destroyed

# nutanix_virtual_machine.vm will be destroyed

Plan: 0 to add, 0 to change, 3 to destroy.

And this is what you will see in Prism Central:

The beauty of Terraform is that if you run terraform apply again, it will build all the resources again (except user data, of course).

Finally, here are the complete Terraform files:
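Because destroy removes everything tracked in the state, it can be worth previewing it first. The following is a hedged sketch with two hypothetical helper functions (the names preview_destroy and destroy_vm_only are invented for this example): terraform plan -destroy shows what would be removed without changing anything, and the -target option limits destroy to a single resource address, here the VM from this article. Terraform's documentation treats -target as a tool for exceptional situations, so this is an illustration rather than a recommended routine.

```shell
# Sketch: safer ways to approach terraform destroy.
# Both function names are assumptions made for this example.

# Show what destroy would remove, without changing anything.
preview_destroy() {
  ( cd "$1" && terraform plan -destroy )
}

# Remove only the VM, leaving the image and the subnet in place.
# Terraform still asks for confirmation before destroying.
destroy_vm_only() {
  ( cd "$1" && terraform destroy -target=nutanix_virtual_machine.vm )
}

if command -v terraform >/dev/null 2>&1; then
  echo "usage: preview_destroy ~/ntnx-tf-test"
else
  echo "terraform is not installed on this host"
fi
```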

main.tf

#Defining providers

terraform {

required_providers {

nutanix = {

source = "nutanix/nutanix"

version = "2.0.0"

}

}

}

provider "nutanix" {

endpoint = var.pc.address

username = var.pc.username

password = var.pc.password

insecure = true

wait_timeout = 30

}

data "nutanix_cluster" "cluster" {

name = var.ntnx_cluster

}

#Uploading an Image to the cluster

resource "nutanix_image" "rl95_image" {

name = "Rocky Linux 9.5 Cloud Image"

description = "Rocky-9-GenericCloud-Base.latest.x86_64"

source_uri = "https://dl.rockylinux.org/pub/rocky/9/images/x86_64/Rocky-9-GenericCloud-LVM.latest.x86_64.qcow2"

}

#Creating a managed subnet in VLAN 123, using IPAM

resource "nutanix_subnet" "project_subnet" {

cluster_uuid = data.nutanix_cluster.cluster.id

name = var.ipam_subnet.name

vlan_id = var.ipam_subnet.vlan_id

subnet_type = "VLAN"

prefix_length = var.ipam_subnet.prefix

ip_config_pool_list_ranges = var.ipam_subnet.pool_range

default_gateway_ip = var.ipam_subnet.gateway

subnet_ip = var.ipam_subnet.subnet_ip

dhcp_domain_name_server_list = var.ipam_subnet.dns

dhcp_domain_search_list = var.ipam_subnet.search_domain

}

#Creating a virtual machine from the uploaded image and connecting it to the created subnet

resource "nutanix_virtual_machine" "vm" {

name = "vmik-terraform-vm"

cluster_uuid = data.nutanix_cluster.cluster.id

num_vcpus_per_socket = 1

num_sockets = 1

memory_size_mib = 2048

disk_list {

data_source_reference = {

kind = "image"

uuid = nutanix_image.rl95_image.id

}

}

nic_list {

nic_type = "NORMAL_NIC"

model = "VIRTIO"

subnet_uuid = nutanix_subnet.project_subnet.id

}

guest_customization_cloud_init_user_data = var.guest_init_script

}

variables.tf

#Prism Central connection settings

variable "pc" {

type = object({

address = string

username = string

password = string

})

}

#Nutanix cluster to operate (must be connected to the Prism Central)

variable "ntnx_cluster" {

type = string

}

#Subnet settings

variable "ipam_subnet" {

type = object({

name = string

vlan_id = number

prefix = number

subnet_ip = string

gateway = string

pool_range = list(string)

dns = list(string)

search_domain = list(string)

})

}

#VM-specific settings

variable "guest_init_script" {

type = string

}

terraform.tfvars

#Prism Central connection settings

pc = {

address = "ntnx-ce-pc.vmik.lab"

username = "admin"

password = "Nutanix/4u"

}

#Nutanix cluster to operate (must be connected to the Prism Central)

ntnx_cluster = "ntnx-ce21"

#Subnet settings

ipam_subnet = {

name = "V123-IPAM"

vlan_id = 123

prefix = 24

subnet_ip = "192.168.123.0"

gateway = "192.168.123.1"

pool_range = ["192.168.123.21 192.168.123.31"]

dns = ["192.168.123.10"]

search_domain = ["vmik.lab"]

}

#VM-specific settings

guest_init_script = "I2Nsb3VkLWNvbmZpZwp1c2VyczoKICAtIG5hbWU6IG51dGFuaXgKICAgIHN1ZG86IFsnQUxMPShBTEwpIE5PUEFTU1dEOkFMTCddCiAgICBsb2NrLXBhc3N3ZDogZmFsc2UKICAgIHBhc3N3ZDogJDYkNGd1RWNEdlgkSEJITUZLWHA0eC9FdXRqME9XNUpHQzZmMXRvdWRiWXMucS5Xa3ZYR2JVeFVUek5jSGF3S1JSd3JQZWhJeFNYSFZjNzBqRk9wM3liOHlaZ2pHVXVFVC4="

In conclusion

I like Terraform and what it can provide for its users. I am considering playing with it more in the future, with different Nutanix resources and different providers.

I hope you liked this post. And don’t forget: I’m just a beginner.
