This is the third article in the NKE series. Previously, I wrote about how to deploy Kubernetes clusters using NKE and how to add or remove workers.

In this article, we will cover update procedures and look at how easily we can update the host OS version and Kubernetes using NKE.

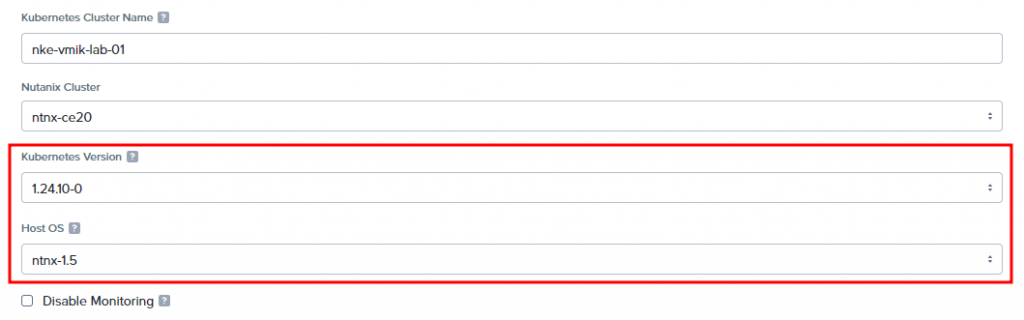

To demonstrate the update procedure, I’ve deployed a cluster with outdated versions of both Kubernetes and the host OS image.

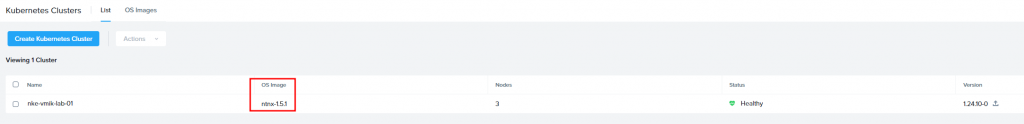

In this deployment, I use Kubernetes version 1.24.10-0 instead of 1.25, and the host OS image ntnx-1.5, although version 1.5.1 is available. By the way, at the time of writing, NKE version 2.10 (this article uses 2.8) supports Kubernetes versions 1.27 and 1.28.

On the clusters page, you may see icons indicating that updates are available for the host OS and Kubernetes:

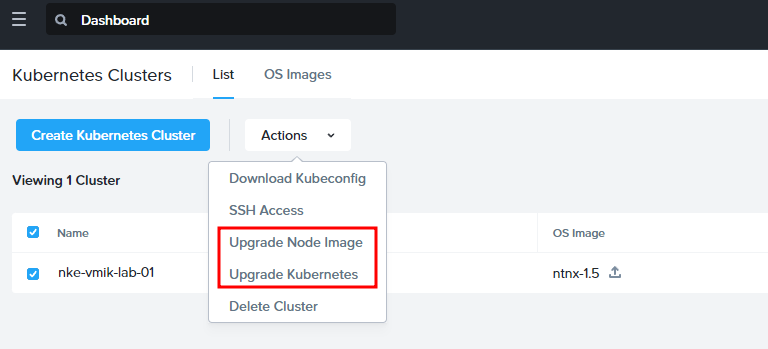

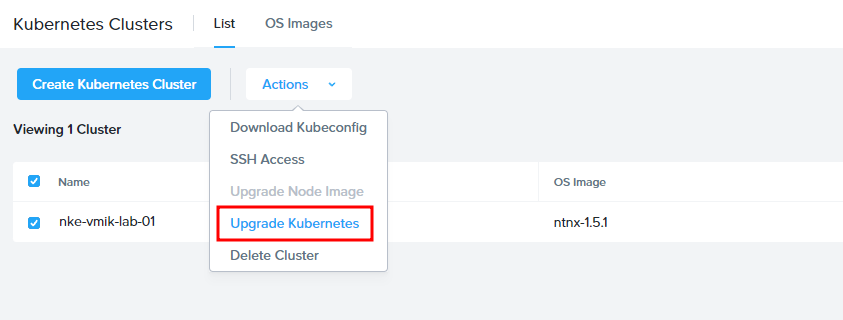

To begin an upgrade, we can click the corresponding icon after OS Image or Kubernetes version, or we can select a cluster and begin the update from the actions menu:

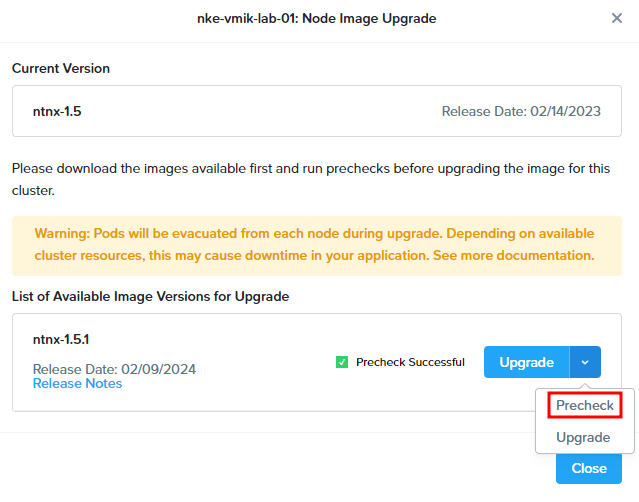

Let’s upgrade the node image first. It’s recommended to run a pre-check before the upgrade:

Before upgrading, let’s look at the cluster. In my case, this is a Kubernetes cluster with two control-plane nodes and three workers:

    [root@k8s-admin /]# kubectl get nodes
    NAME                              STATUS   ROLES                  AGE    VERSION
    nke-vmik-lab-01-9bc233-master-0   Ready    control-plane,master   128m   v1.24.10
    nke-vmik-lab-01-9bc233-master-1   Ready    control-plane,master   128m   v1.24.10
    nke-vmik-lab-01-9bc233-worker-0   Ready    node                   125m   v1.24.10
    nke-vmik-lab-01-9bc233-worker-1   Ready    node                   125m   v1.24.10
    nke-vmik-lab-01-9bc233-worker-2   Ready    node                   125m   v1.24.10

And a deployment of nginx containers:

    [root@k8s-admin /]# kubectl get pods -n vmik-test -o wide
    NAME                                READY   STATUS    RESTARTS   AGE    IP               NODE                              NOMINATED NODE   READINESS GATES
    nginx-deployment-544dc8b7c4-5qvkw   1/1     Running   0          137m   172.20.83.196    nke-vmik-lab-01-9bc233-worker-2   <none>           <none>
    nginx-deployment-544dc8b7c4-8lstx   1/1     Running   0          137m   172.20.185.136   nke-vmik-lab-01-9bc233-worker-0   <none>           <none>
    nginx-deployment-544dc8b7c4-8x2gk   1/1     Running   0          137m   172.20.83.198    nke-vmik-lab-01-9bc233-worker-2   <none>           <none>
    nginx-deployment-544dc8b7c4-fz285   1/1     Running   0          137m   172.20.185.134   nke-vmik-lab-01-9bc233-worker-0   <none>           <none>
    nginx-deployment-544dc8b7c4-gqh2n   1/1     Running   0          137m   172.20.83.197    nke-vmik-lab-01-9bc233-worker-2   <none>           <none>
    nginx-deployment-544dc8b7c4-hkzfl   1/1     Running   0          137m   172.20.185.135   nke-vmik-lab-01-9bc233-worker-0   <none>           <none>
    nginx-deployment-544dc8b7c4-k9zgb   1/1     Running   0          137m   172.20.174.200   nke-vmik-lab-01-9bc233-worker-1   <none>           <none>
    nginx-deployment-544dc8b7c4-rfghw   1/1     Running   0          137m   172.20.174.201   nke-vmik-lab-01-9bc233-worker-1   <none>           <none>
    nginx-deployment-544dc8b7c4-tdzs7   1/1     Running   0          137m   172.20.174.199   nke-vmik-lab-01-9bc233-worker-1   <none>           <none>
    nginx-deployment-544dc8b7c4-zn72t   1/1     Running   0          137m   172.20.174.198   nke-vmik-lab-01-9bc233-worker-1   <none>           <none>

Now click the Upgrade button. The cluster status changes to “Upgrading”:

The upgrade order is etcd nodes, master nodes, and worker nodes.

During an upgrade, it’s expected that each node is drained and its pods are restarted on other nodes. All VMs in the cluster are updated one by one, so you should not expect cluster downtime.

During the control-plane update, you can expect a short Kubernetes API downtime if you’re using an active-standby configuration.
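A script that talks to the Kubernetes API while the control plane is being updated can ride out such a short outage by retrying. The following is a minimal Python sketch of that idea, not something NKE provides: the helper name `call_with_retry` and its default values are my own assumptions, and `func` stands for whatever API call your script makes.

```python
import time


def call_with_retry(func, attempts=5, initial_delay=1.0, sleep=time.sleep):
    """Call func(), retrying with exponential backoff on any exception.

    Hypothetical helper: `attempts` and `initial_delay` are illustrative
    defaults, not values recommended by NKE.
    """
    delay = initial_delay
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except Exception:
            if attempt == attempts:
                raise  # give up after the last attempt
            sleep(delay)  # wait before trying again
            delay *= 2  # double the wait each time
```

With the defaults above, a call that keeps failing is retried after 1, 2, 4, and 8 seconds before the last error is raised.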

During the worker upgrade, you may see that NKE disables scheduling on the node that is being upgraded:

    [root@k8s-admin /]# kubectl get nodes
    NAME                              STATUS                     ROLES                  AGE    VERSION
    nke-vmik-lab-01-9bc233-master-0   Ready                      control-plane,master   152m   v1.24.10
    nke-vmik-lab-01-9bc233-master-1   Ready                      control-plane,master   151m   v1.24.10
    nke-vmik-lab-01-9bc233-worker-0   Ready                      node                   149m   v1.24.10
    nke-vmik-lab-01-9bc233-worker-1   Ready,SchedulingDisabled   node                   149m   v1.24.10
    nke-vmik-lab-01-9bc233-worker-2   Ready                      node                   149m   v1.24.10

And pods are restarted on the other workers:

    [root@k8s-admin /]# kubectl get pods -n vmik-test -o wide
    NAME                                READY   STATUS    RESTARTS   AGE     IP               NODE                              NOMINATED NODE   READINESS GATES
    nginx-deployment-544dc8b7c4-5qvkw   1/1     Running   0          144m    172.20.83.196    nke-vmik-lab-01-9bc233-worker-2   <none>           <none>
    nginx-deployment-544dc8b7c4-64pzl   1/1     Running   0          3m58s   172.20.83.199    nke-vmik-lab-01-9bc233-worker-2   <none>           <none>
    nginx-deployment-544dc8b7c4-7wf62   1/1     Running   0          104s    172.20.185.142   nke-vmik-lab-01-9bc233-worker-0   <none>           <none>
    nginx-deployment-544dc8b7c4-8x2gk   1/1     Running   0          144m    172.20.83.198    nke-vmik-lab-01-9bc233-worker-2   <none>           <none>
    nginx-deployment-544dc8b7c4-99cgg   1/1     Running   0          3m58s   172.20.83.201    nke-vmik-lab-01-9bc233-worker-2   <none>           <none>
    nginx-deployment-544dc8b7c4-gqh2n   1/1     Running   0          144m    172.20.83.197    nke-vmik-lab-01-9bc233-worker-2   <none>           <none>
    nginx-deployment-544dc8b7c4-hht22   1/1     Running   0          104s    172.20.185.139   nke-vmik-lab-01-9bc233-worker-0   <none>           <none>
    nginx-deployment-544dc8b7c4-jxsp9   1/1     Running   0          104s    172.20.185.143   nke-vmik-lab-01-9bc233-worker-0   <none>           <none>
    nginx-deployment-544dc8b7c4-kgmjr   1/1     Running   0          103s    172.20.185.146   nke-vmik-lab-01-9bc233-worker-0   <none>           <none>
    nginx-deployment-544dc8b7c4-kqcmc   1/1     Running   0          104s    172.20.185.145   nke-vmik-lab-01-9bc233-worker-0   <none>           <none>

In my lab environment, the whole procedure took about 15 minutes. In a production environment it will take longer, because clusters have more nodes and more containers to restart.
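The redistribution visible in the pod listing above can also be summarized per node. The following is a minimal Python sketch, not part of NKE. It assumes `kubectl` is on the PATH with access to the cluster and relies on the standard `-o json` output, where each pod's node is stored in `spec.nodeName`. The function names `pods_per_node` and `fetch_pods_json` are my own.

```python
import json
import subprocess
from collections import Counter


def pods_per_node(pods_json: str) -> Counter:
    """Count pods per node from `kubectl get pods -o json` output."""
    items = json.loads(pods_json).get("items", [])
    # Pods that have not been scheduled yet have no spec.nodeName.
    return Counter(
        item.get("spec", {}).get("nodeName", "<unscheduled>") for item in items
    )


def fetch_pods_json(namespace: str) -> str:
    """Run kubectl and return its JSON output (requires cluster access)."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout
```

Calling `pods_per_node(fetch_pods_json("vmik-test"))` at the moment of the listing above would report five pods each on worker-0 and worker-2 and none on worker-1, which was cordoned at that point.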

Now we can see that the host OS image is updated:

Let’s upgrade the Kubernetes version in the same way. Select the cluster, click actions, and Upgrade Kubernetes:

You may notice that “Upgrade Node Image” is grayed out because there are no newer versions available.

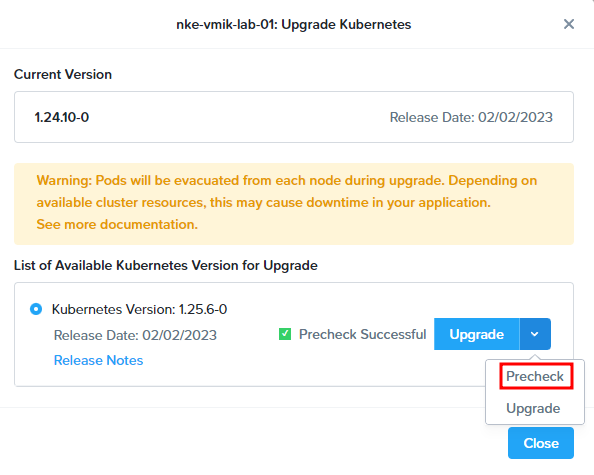

In the window that opens, select the Kubernetes version and run pre-checks before upgrading:

Click Upgrade if the pre-check is successful. An upgrade task will be created, and you can monitor the process:

The procedure is similar to the OS image upgrade: pods are restarted on other nodes in the cluster while each node is upgraded to the new Kubernetes version.

    [root@k8s-admin /]# kubectl get nodes
    NAME                              STATUS                     ROLES                  AGE    VERSION
    nke-vmik-lab-01-9bc233-master-0   Ready                      control-plane,master   168m   v1.25.6
    nke-vmik-lab-01-9bc233-master-1   Ready                      control-plane,master   168m   v1.25.6
    nke-vmik-lab-01-9bc233-worker-0   Ready,SchedulingDisabled   node                   166m   v1.24.10
    nke-vmik-lab-01-9bc233-worker-1   Ready                      node                   166m   v1.25.6
    nke-vmik-lab-01-9bc233-worker-2   Ready                      node                   166m   v1.25.6

In my test environment, this procedure took about 10 minutes, but expect it to take longer depending on the size of the cluster and its workloads.
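The mixed state in the node listing above, with most nodes already on v1.25.6 and one worker still cordoned on v1.24.10, can be checked with a short script instead of by eye. This is a minimal Python sketch under my own assumptions: it takes the output of `kubectl get nodes -o json` as a string and reads the standard fields `status.nodeInfo.kubeletVersion` and `spec.unschedulable`. The function name `upgrade_progress` is hypothetical.

```python
import json


def upgrade_progress(nodes_json: str, target_version: str) -> dict:
    """Split nodes into upgraded, pending, and cordoned lists.

    Expects the output of `kubectl get nodes -o json`; target_version
    uses the kubelet format, for example "v1.25.6".
    """
    progress = {"upgraded": [], "pending": [], "cordoned": []}
    for item in json.loads(nodes_json).get("items", []):
        name = item["metadata"]["name"]
        kubelet = item["status"]["nodeInfo"]["kubeletVersion"]
        key = "upgraded" if kubelet == target_version else "pending"
        progress[key].append(name)
        # spec.unschedulable is set while a node is cordoned
        # (kubectl shows this as SchedulingDisabled).
        if item.get("spec", {}).get("unschedulable", False):
            progress["cordoned"].append(name)
    return progress
```

For the listing above and a target of `"v1.25.6"`, worker-0 would appear under both `pending` and `cordoned`, and the other four nodes under `upgraded`.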

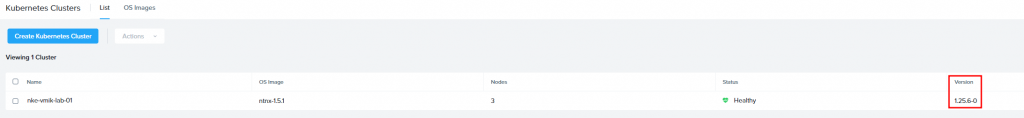

The cluster is upgraded:

    [root@k8s-admin /]# kubectl get nodes
    NAME                              STATUS   ROLES                  AGE    VERSION
    nke-vmik-lab-01-9bc233-master-0   Ready    control-plane,master   173m   v1.25.6
    nke-vmik-lab-01-9bc233-master-1   Ready    control-plane,master   173m   v1.25.6
    nke-vmik-lab-01-9bc233-worker-0   Ready    node                   170m   v1.25.6
    nke-vmik-lab-01-9bc233-worker-1   Ready    node                   170m   v1.25.6
    nke-vmik-lab-01-9bc233-worker-2   Ready    node                   170m   v1.25.6

In conclusion:

This is how easily we can update the host OS and Kubernetes using NKE. Don’t forget to keep your clusters updated, and note that it’s sometimes not possible to upgrade directly to the latest available version if you’re running a very old one.

Also, if you’re running an outdated Kubernetes cluster, you may find that updating NKE itself is not permitted until you update Kubernetes first.
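One reason a very old cluster cannot jump straight to the newest release is that upstream Kubernetes does not support skipping minor versions during an upgrade. Assuming NKE follows the same one-minor-at-a-time rule (this is my assumption; check the NKE release notes for the upgrade paths that are actually supported), the intermediate steps can be sketched in Python. The helper name `minor_upgrade_path` is hypothetical.

```python
def minor_upgrade_path(current: str, target: str) -> list[str]:
    """List the minor versions to step through from current to target.

    Assumes one minor version per upgrade step; accepts strings such as
    "1.24.10-0", "v1.25.6", or "1.27".
    """
    cur_major, cur_minor = (int(p) for p in current.lstrip("v").split(".")[:2])
    tgt_major, tgt_minor = (int(p) for p in target.lstrip("v").split(".")[:2])
    if cur_major != tgt_major:
        raise ValueError("major version changes are not handled in this sketch")
    if tgt_minor < cur_minor:
        raise ValueError("downgrades are not supported")
    return [f"{cur_major}.{m}" for m in range(cur_minor + 1, tgt_minor + 1)]


# minor_upgrade_path("1.24.10-0", "1.27") -> ["1.25", "1.26", "1.27"]
```

Under that assumption, the cluster from this article would need three Kubernetes upgrades to get from 1.24 to 1.27.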
